Exporting Social Data for Advanced Analysis

Raw social media metrics tell you what happened. Exported datasets tell you why.

The difference between surface-level social listening and genuine intelligence lies in what you do after the data collection. When you export social data into formats compatible with statistical tools, visualization platforms, and machine learning pipelines, you transform scattered observations into strategic assets.

Introduction

Social media platforms generate millions of data points daily—engagement metrics, network connections, content patterns, and user behaviors. Most teams access this data through dashboards and real-time feeds, which works well for monitoring but falls short when you need to answer complex questions.

Why did that campaign resonate with one audience segment but not another? Which network clusters amplified your competitor's announcement? What conversation patterns precede purchase intent signals?

These questions require analysis that goes beyond what any dashboard can provide. They require exported datasets that you can slice, model, and visualize using the tools designed for serious analytical work.

This guide covers when to export social data, how to structure exports for different analytical workflows, and practical approaches to extracting maximum value from your exported datasets.

When Dashboard Analysis Isn't Enough

Dashboard interfaces excel at real-time monitoring and quick answers. Export-based analysis becomes essential when your questions involve:

Cross-Dataset Correlation

Understanding how social signals relate to external variables—sales data, market movements, competitor actions—requires merging datasets. You cannot correlate Twitter sentiment with your CRM pipeline stage inside a social listening dashboard. You need the raw data exported, timestamped, and joined with your other data sources.
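A minimal sketch of that kind of join, using only the Python standard library. The field names (`created_at`, `sentiment`, `deals_opened`) and the sample rows are hypothetical; substitute whatever columns your export and CRM actually provide.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical exported posts: ISO 8601 timestamps plus a sentiment score.
posts = [
    {"created_at": "2024-03-01T09:15:00+00:00", "sentiment": 0.6},
    {"created_at": "2024-03-01T17:40:00+00:00", "sentiment": 0.2},
    {"created_at": "2024-03-02T11:05:00+00:00", "sentiment": -0.4},
]

# Hypothetical CRM extract keyed by calendar day.
crm_by_day = {
    "2024-03-01": {"deals_opened": 5},
    "2024-03-02": {"deals_opened": 2},
}

def daily_sentiment(rows):
    """Average sentiment per calendar day, using the date as written in the timestamp."""
    buckets = defaultdict(list)
    for row in rows:
        day = datetime.fromisoformat(row["created_at"]).date().isoformat()
        buckets[day].append(row["sentiment"])
    return {day: sum(values) / len(values) for day, values in buckets.items()}

def join_on_day(sentiment_by_day, crm):
    """Inner join: keep only the days present in both sources."""
    return [
        {"date": day, "avg_sentiment": score, **crm[day]}
        for day, score in sorted(sentiment_by_day.items())
        if day in crm
    ]

joined = join_on_day(daily_sentiment(posts), crm_by_day)
```

Joining on a shared calendar day is only one option; the right join key depends on the granularity your other data sources record.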

Statistical Rigor

Dashboards show trends. Statistical analysis tests whether those trends are significant or could plausibly be noise. When you need confidence intervals on engagement rate differences, regression models predicting virality factors, or cluster analysis identifying audience segments, you need data in formats that statistical tools can process.
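As one illustration, a confidence interval on the difference between two engagement rates can be sketched with a simple Wald interval. This assumes each impression is an independent trial that either produces an engagement or does not, which is a simplification; the counts below are made up for the example.

```python
import math

def engagement_rate_diff_ci(eng_a, imp_a, eng_b, imp_b, z=1.96):
    """Wald interval for the difference in engagement rates (A minus B).

    z=1.96 corresponds to an approximate 95% confidence level.
    """
    p_a = eng_a / imp_a
    p_b = eng_b / imp_b
    standard_error = math.sqrt(p_a * (1 - p_a) / imp_a + p_b * (1 - p_b) / imp_b)
    diff = p_a - p_b
    return diff - z * standard_error, diff, diff + z * standard_error

# Hypothetical counts for two campaigns.
low, diff, high = engagement_rate_diff_ci(eng_a=480, imp_a=12000, eng_b=390, imp_b=13000)

# If the interval excludes zero, the difference is unlikely to be noise alone.
excludes_zero = low > 0 or high < 0
```

For small samples or rates very close to zero, a dedicated statistics package with more robust interval methods is the safer choice.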

Historical Pattern Analysis

Most dashboards emphasize recent data. Deep historical analysis—understanding seasonal patterns across multiple years, tracking gradual shifts in audience composition, or identifying long-term trends in content performance—requires comprehensive exports that preserve temporal granularity.

Machine Learning Applications

Training models to predict content performance, classify sentiment, or identify potential customers requires substantial labeled datasets. These models cannot train on dashboard visualizations. They need structured exports with consistent schemas.

Structuring Exports for Different Analytical Workflows

The value of an export depends heavily on how well its structure matches your analytical goals. Different workflows demand different export configurations.

For Spreadsheet Analysis

When your analysis will happen in Excel, Google Sheets, or similar tools, optimize for human readability and formula compatibility:

- Keep field counts manageable (10-15 columns maximum for comfortable horizontal scrolling)

- Use clear, descriptive column headers without abbreviations

- Export dates in formats your spreadsheet recognizes automatically

- Include engagement totals rather than requiring calculated fields when possible

For a content performance analysis, you might export: post ID, text, author, publish date, like count, reply count, retweet count, quote count, and impression count. This gives you everything needed for pivot tables and conditional formatting without overwhelming the view.
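A sketch of how such an export could be reshaped for a spreadsheet with Python's `csv` module. The input keys (`like_count`, `created_at`, and so on) and the sample row are hypothetical stand-ins for whatever your export produces.

```python
import csv
import io

# Hypothetical rows as they might arrive from an export.
posts = [
    {"id": "101", "text": "Launch day!", "author": "brand",
     "created_at": "2024-03-01T09:15:00Z", "like_count": 120,
     "reply_count": 14, "retweet_count": 33, "quote_count": 5,
     "impression_count": 9800},
]

COLUMNS = ["Post ID", "Text", "Author", "Publish Date", "Likes", "Replies",
           "Retweets", "Quotes", "Impressions", "Total Engagements"]

def to_spreadsheet_row(post):
    """Rename fields to readable headers and add a pre-computed total."""
    total = (post["like_count"] + post["reply_count"]
             + post["retweet_count"] + post["quote_count"])
    return {
        "Post ID": post["id"],
        "Text": post["text"],
        "Author": post["author"],
        # Drop the "T" and trailing "Z" to get "YYYY-MM-DD HH:MM:SS".
        "Publish Date": post["created_at"].replace("T", " ").rstrip("Z"),
        "Likes": post["like_count"],
        "Replies": post["reply_count"],
        "Retweets": post["retweet_count"],
        "Quotes": post["quote_count"],
        "Impressions": post["impression_count"],
        "Total Engagements": total,
    }

# Written to an in-memory buffer here; a real script would open a file instead.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
writer.writeheader()
writer.writerows(to_spreadsheet_row(p) for p in posts)
csv_text = buffer.getvalue()
```

Pre-computing the total keeps the spreadsheet free of helper formulas, at the cost of one extra column.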

For Statistical Software (R, Python, SPSS)

Statistical tools handle complexity better than spreadsheets but benefit from clean categorical variables and consistent data types:

- Include all potentially relevant fields—you can always drop columns, but you cannot recover unexported data

- Export boolean fields as true/false rather than formatted strings

- Preserve timestamp precision for time-series analysis

- Include ID fields that enable joining with other datasets

A network analysis export might include: user ID, username, follower count, following count, account creation date, verification status, location, description, and aggregated engagement metrics from relevant posts. This enables both descriptive statistics and relationship modeling.
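A small sketch of loading such an export with explicit types. The CSV contents are invented for illustration, and the assumption that booleans arrive as `true`/`false` and timestamps as ISO 8601 strings should be checked against your actual export.

```python
import csv
import io
from datetime import datetime

# Hypothetical export contents; a real script would read from a file.
raw = io.StringIO(
    "id,username,followersCount,followingCount,createdAt,isVerified\n"
    "42,alice,1500,300,2019-06-01T12:00:00+00:00,true\n"
    "43,bob,,120,2021-01-15T08:30:00+00:00,false\n"
)

def to_int(value):
    """Empty cells become None instead of raising or silently becoming 0."""
    return int(value) if value != "" else None

def parse_user(row):
    """Convert CSV strings into typed values suitable for analysis."""
    return {
        "id": row["id"],  # keep IDs as strings so join keys stay exact
        "username": row["username"],
        "followersCount": to_int(row["followersCount"]),
        "followingCount": to_int(row["followingCount"]),
        "createdAt": datetime.fromisoformat(row["createdAt"]),
        "isVerified": row["isVerified"].strip().lower() == "true",
    }

users = [parse_user(row) for row in csv.DictReader(raw)]
```

Keeping missing values as `None` rather than zero matters later: a zero follower count and an unknown follower count should not be averaged together.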

For Visualization Platforms (Tableau, Power BI, Looker)

Visualization tools work best with denormalized data and pre-calculated metrics:

- Include dimension fields that enable slicing (dates, categories, user segments)

- Pre-aggregate where appropriate to reduce processing load

- Export geographical data in standardized formats

- Include both raw counts and rates/ratios
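To make the last two points concrete, here is a rough sketch of pre-aggregating post rows by date and segment while keeping both raw counts and a rate. The `segment` dimension and the sample rows are hypothetical.

```python
from collections import defaultdict

# Hypothetical post-level rows with a slicing dimension already attached.
posts = [
    {"date": "2024-03-01", "segment": "developers", "likes": 40, "impressions": 1000},
    {"date": "2024-03-01", "segment": "developers", "likes": 10, "impressions": 1000},
    {"date": "2024-03-01", "segment": "marketers", "likes": 30, "impressions": 500},
]

def pre_aggregate(rows):
    """Sum counts per (date, segment) and attach a like rate alongside them."""
    totals = defaultdict(lambda: {"posts": 0, "likes": 0, "impressions": 0})
    for row in rows:
        bucket = totals[(row["date"], row["segment"])]
        bucket["posts"] += 1
        bucket["likes"] += row["likes"]
        bucket["impressions"] += row["impressions"]

    summary = []
    for (day, segment), t in sorted(totals.items()):
        # Leave the rate empty rather than dividing by zero.
        rate = t["likes"] / t["impressions"] if t["impressions"] else None
        summary.append({"date": day, "segment": segment, **t, "like_rate": rate})
    return summary

summary = pre_aggregate(posts)
```

Keeping the raw sums next to the rate lets the visualization tool re-aggregate correctly across larger groupings, which averaging pre-computed rates would not.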

For Machine Learning Pipelines

ML workflows require volume and consistency over human readability:

- Export as many records as are available; model performance typically improves with more training data

- Include all available features; feature selection happens during model development

- Maintain consistent schemas across exports to enable dataset concatenation

- Preserve original text fields without truncation for NLP applications
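The schema-consistency point can be enforced mechanically before concatenation. The following is a minimal sketch, assuming each export has already been loaded as a list of dictionaries; the function name and sample rows are illustrative.

```python
def concat_exports(exports):
    """Concatenate lists of row dicts, refusing to mix different schemas."""
    expected = None
    combined = []
    for export_index, rows in enumerate(exports):
        for row in rows:
            keys = set(row)
            if expected is None:
                expected = keys  # the first row seen defines the schema
            elif keys != expected:
                raise ValueError(
                    f"export {export_index}: missing {sorted(expected - keys)}, "
                    f"unexpected {sorted(keys - expected)}"
                )
            combined.append(row)
    return combined

# Hypothetical monthly exports with matching columns.
january = [{"id": "1", "text": "hello", "likeCount": 3}]
february = [{"id": "2", "text": "world", "likeCount": 5}]
training_rows = concat_exports([january, february])
```

Failing loudly on a mismatch is a deliberate choice here: silently filling missing features with defaults tends to surface much later as a model quality problem.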

The Export Workflow: From Query to Analysis

Effective data export follows a deliberate process that ensures you get the right data in the right format.

Step 1: Define Your Analytical Question

Before touching any export function, articulate precisely what you're trying to learn. Vague goals produce unfocused exports that either miss critical fields or include overwhelming noise.

Poor question: "What's happening with our brand on social?"

Better question: "Which user segments drove amplification of our product launch announcement, and how do their network characteristics differ from users who saw but didn't share?"

The better question immediately clarifies what you need: post interaction data, user profile data for interactors, network metrics, and comparative data for users who were exposed but didn't engage.

Step 2: Identify Required Data Points

Map your analytical question to specific data fields. For the amplification analysis above:

Post data needed:

- Original post ID and metrics

- Retweet timestamps and user IDs

- Quote tweet IDs, timestamps, and user IDs

User data needed for amplifiers:

- User IDs, usernames

- Follower/following counts

- Account age

- Verification status

- Bio text (for segment classification)

Comparison group data needed:

- Impression data (if available)

- Users who engaged differently (liked but didn't share)

Step 3: Configure and Execute Exports

With clear requirements, configure your exports to capture the necessary data. When working with large datasets, pagination becomes critical. Social intelligence platforms typically return data in pages—100 posts per page, 1000 users per page—requiring systematic retrieval of all relevant records.

For comprehensive exports, look for CSV export functionality that compiles complete datasets into downloadable files. This approach handles pagination automatically and produces analysis-ready outputs.
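Where a bulk CSV export is not available and pages must be retrieved one at a time, the retrieval loop can be sketched as follows. `fetch_page` is a hypothetical callable standing in for whatever client your platform provides; it is not a real API.

```python
def fetch_all(fetch_page, page_size=100, max_pages=10_000):
    """Collect every record from a paginated source.

    fetch_page(page_number, page_size) is a hypothetical callable returning a
    list of records; a page shorter than page_size marks the end of the data.
    """
    records = []
    for page_number in range(1, max_pages + 1):
        page = fetch_page(page_number, page_size)
        records.extend(page)
        if len(page) < page_size:
            return records
    raise RuntimeError("max_pages reached before the source was exhausted")

# Stand-in for a real client: serves 250 fake records in pages.
_fake_records = [{"id": i} for i in range(250)]

def fake_fetch(page_number, page_size):
    start = (page_number - 1) * page_size
    return _fake_records[start:start + page_size]

all_records = fetch_all(fake_fetch)
```

The `max_pages` guard exists so that a misbehaving source cannot turn the loop into an endless one.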

Step 4: Validate Before Analyzing

Before investing time in analysis, verify your export:

- Check record counts against expected totals

- Confirm date ranges match your intended scope

- Verify key fields populated correctly (no unexpected nulls)

- Spot-check a sample of records against source data

Validation prevents the frustrating experience of completing an analysis only to discover the underlying data was incomplete or malformed.
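The first three checks are easy to automate. This sketch assumes rows have been loaded as dictionaries and that the date field holds `YYYY-MM-DD` strings; the sample rows and expected count are invented for the example.

```python
from datetime import date

def validate_export(rows, expected_count, start, end, required_fields,
                    date_field="createdAtDate"):
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    if len(rows) != expected_count:
        problems.append(f"expected {expected_count} records, found {len(rows)}")
    for index, row in enumerate(rows):
        day = date.fromisoformat(row[date_field])
        if not (start <= day <= end):
            problems.append(f"row {index}: {day} is outside {start}..{end}")
        for field in required_fields:
            if row.get(field) in (None, ""):
                problems.append(f"row {index}: {field} is empty")
    return problems

# Hypothetical export that is short one record, has one out-of-range date,
# and has one empty required field.
rows = [
    {"id": "1", "createdAtDate": "2024-01-05", "likeCount": "12"},
    {"id": "2", "createdAtDate": "2024-04-02", "likeCount": ""},
]
problems = validate_export(
    rows,
    expected_count=3,
    start=date(2024, 1, 1),
    end=date(2024, 3, 31),
    required_fields=["id", "likeCount"],
)
```

Spot-checking records against the source still has to be done by hand; the script only narrows down where to look.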

How Xpoz Addresses This

Xpoz structures its data retrieval around the export-to-analysis workflow that serious intelligence work requires.

Every major data retrieval operation—whether pulling user connections, searching posts by keywords, or analyzing engagement on specific content—includes a CSV export capability. When you query for data, the response includes a dataDumpExportOperationId that you can use to generate a downloadable CSV containing the complete dataset.

This matters because pagination limits (100 posts per page, 1000 users per page with default fields) can make comprehensive analysis tedious through API responses alone. The CSV export compiles everything into a single file ready for import into your analytical environment.

The field selection system enables exports optimized for your specific workflow. Rather than receiving every available field regardless of relevance, you specify exactly which fields you need:

For a network analysis export of Twitter followers:

fields: ["id", "username", "name", "followersCount", "followingCount", "description", "createdAt", "isVerified"]

For a content performance export of posts:

fields: ["id", "text", "authorUsername", "createdAtDate", "likeCount", "retweetCount", "quoteCount", "impressionCount", "hashtags"]

The aggregation fields available when searching users by keywords (relevantTweetsCount, relevantTweetsLikesSum, relevantTweetsRetweetsSum, and their Instagram equivalents) provide pre-calculated metrics across matching content—exactly the kind of derived metrics that accelerate analysis.

For both Twitter and Instagram, the data schema documentation in the product knowledge base provides complete field listings, enabling you to design exports that capture precisely the dimensions your analysis requires.

Practical Examples

Example 1: Competitive Content Analysis

Goal: Compare content performance patterns between your brand and three competitors over the past quarter.

Export approach:

- Export posts by author for each brand account, including engagement metrics and timestamps

- Configure exports to include: id, text, createdAtDate, likeCount, replyCount, retweetCount, quoteCount, impressionCount, hashtags

- Import all four CSVs into your analysis tool

- Add a "brand" column to each dataset before merging

- Analyze engagement distributions, posting frequency, content themes, and temporal patterns

Analytical outputs:

- Engagement rate benchmarking by brand

- Content theme analysis (via hashtag and text clustering)

- Optimal posting time identification

- Correlation between post length and engagement
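The merge and benchmarking steps might look roughly like this once the CSVs are loaded as lists of dictionaries. The brand names and numbers are hypothetical, and defining engagement rate as total interactions divided by impressions is one common convention rather than the only one.

```python
from statistics import median

# Hypothetical per-brand exports, already parsed into typed rows.
exports = {
    "our_brand": [
        {"likeCount": 50, "replyCount": 5, "retweetCount": 10, "quoteCount": 5, "impressionCount": 1000},
        {"likeCount": 20, "replyCount": 2, "retweetCount": 6, "quoteCount": 2, "impressionCount": 1000},
    ],
    "competitor_a": [
        {"likeCount": 10, "replyCount": 0, "retweetCount": 5, "quoteCount": 5, "impressionCount": 2000},
    ],
}

def merge_with_brand(exports_by_brand):
    """Tag every row with its brand, then combine into one list."""
    return [
        {**row, "brand": brand}
        for brand, rows in exports_by_brand.items()
        for row in rows
    ]

def engagement_rate(row):
    interactions = (row["likeCount"] + row["replyCount"]
                    + row["retweetCount"] + row["quoteCount"])
    return interactions / row["impressionCount"]

def median_rate_by_brand(rows):
    """Median engagement rate per brand, skipping rows with no impressions."""
    rates = {}
    for row in rows:
        if row["impressionCount"]:
            rates.setdefault(row["brand"], []).append(engagement_rate(row))
    return {brand: median(values) for brand, values in rates.items()}

merged = merge_with_brand(exports)
benchmark = median_rate_by_brand(merged)
```

The median is used instead of the mean because a single viral post can otherwise dominate a brand's benchmark.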

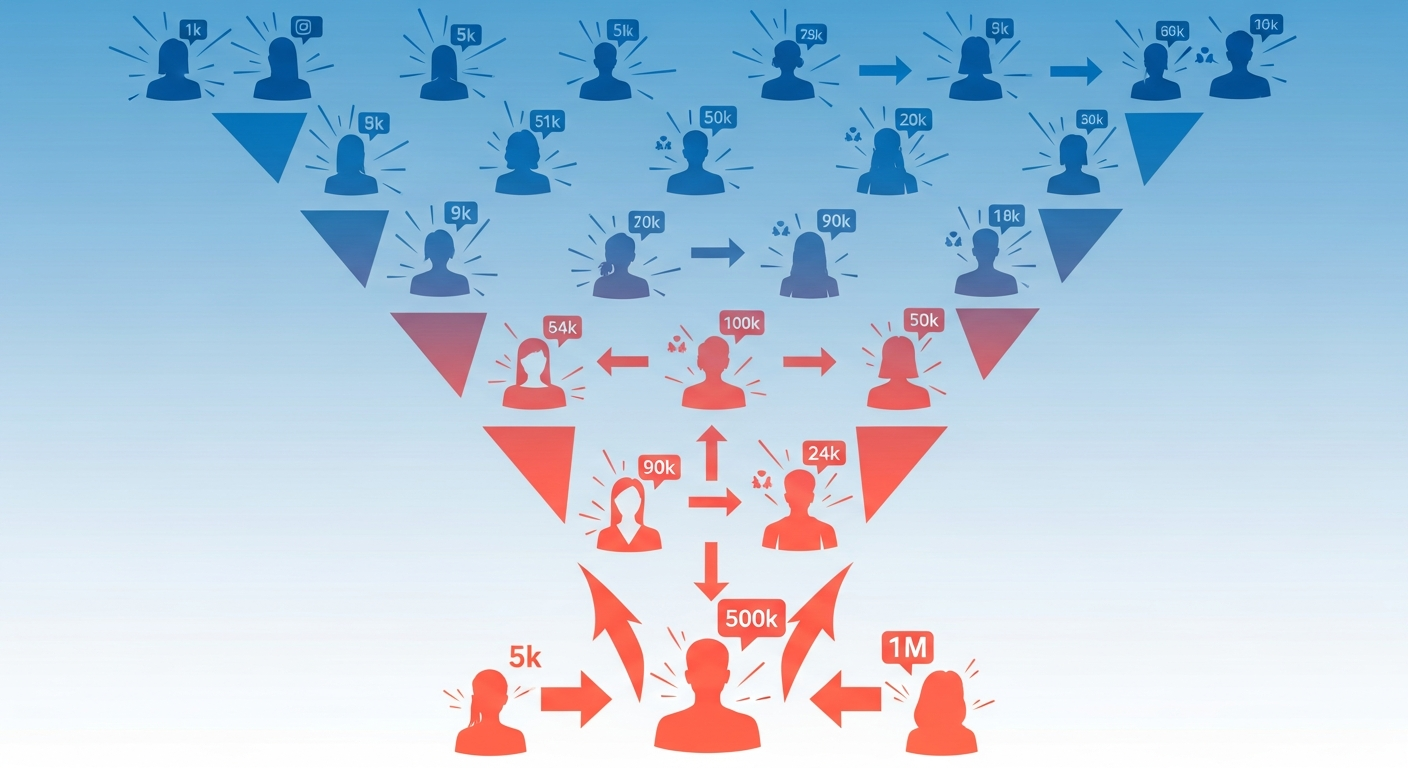

Example 2: Influencer Network Mapping

Goal: Identify and segment influencers discussing sustainable technology, understanding their network positions and audience overlap.

Export approach:

- Search for users posting about sustainable technology keywords

- Export user profiles with: username, name, description, followersCount, followingCount, relevantTweetsCount, relevantTweetsLikesSum

- For top influencers identified, export their follower lists

- Import into a network analysis or visualization tool (Gephi, NodeXL, or Python's networkx)

- Calculate centrality metrics and identify clusters

Analytical outputs:

- Ranked influencer list by reach and engagement

- Network clusters representing distinct communities

- Identification of bridge accounts connecting communities

- Audience overlap analysis between key influencers
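Audience overlap is the simplest of these outputs to compute directly from exported follower lists. A minimal sketch using Jaccard similarity, with made-up influencer names and follower IDs:

```python
from itertools import combinations

# Hypothetical follower ID sets built from exported follower lists.
followers = {
    "influencer_a": {"u1", "u2", "u3", "u4"},
    "influencer_b": {"u3", "u4", "u5"},
    "influencer_c": {"u6"},
}

def jaccard(a, b):
    """Shared followers divided by combined followers (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

# One overlap score per unordered pair of influencers.
overlap = {
    (x, y): jaccard(followers[x], followers[y])
    for x, y in combinations(sorted(followers), 2)
}
```

Centrality metrics and cluster detection are better left to a dedicated graph tool; the overlap scores can be exported as edge weights for that step.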

Example 3: Campaign Attribution Analysis

Goal: Understand which conversation patterns preceded measurable interest spikes in your product.

Export approach:

- Export all mentions of your product/brand with engagement metrics and timestamps

- Export user profiles for all accounts that mentioned you

- Merge with your conversion data (timestamped)

- Use time-series analysis to identify leading indicators

Analytical outputs:

- Lag correlation between social mentions and conversion events

- User segment profiles most associated with conversion

- Content themes that precede interest spikes

- Network effect measurement (how conversations spread before conversion)
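The lag correlation output can be sketched as follows, assuming mentions and conversions have already been aggregated into aligned daily series. The two series are invented so that conversions echo mention spikes two days later.

```python
def pearson(xs, ys):
    """Pearson correlation; returns NaN if either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")
    return cov / (var_x * var_y) ** 0.5

def lag_correlations(mentions, conversions, max_lag):
    """Correlate mentions on day t with conversions on day t + lag."""
    results = {}
    for lag in range(max_lag + 1):
        xs = mentions[:len(mentions) - lag]
        ys = conversions[lag:]
        results[lag] = pearson(xs, ys)
    return results

# Hypothetical daily counts.
mentions = [10, 12, 30, 11, 9, 28, 10, 12]
conversions = [2, 2, 2, 2, 9, 2, 2, 8]

by_lag = lag_correlations(mentions, conversions, max_lag=3)
best_lag = max(by_lag, key=by_lag.get)
```

A strong correlation at some lag is a candidate leading indicator, not proof of causation; with short series like this one, it is worth confirming on a held-out period.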

Advanced Considerations for Data Export

Handling Rate Limits and Large Datasets

When exporting substantial datasets, account for the cost of data retrieval. Features like forceLatest bypass caching, which increases API load and may slow retrieval. For historical analysis where real-time freshness isn't critical, cached data retrieval typically provides faster exports.

For very large exports (hundreds of thousands of records), plan for retrieval time. CSV export operations typically complete within 30-60 seconds, but compilation time grows with dataset size, so the largest exports can take longer.

Data Freshness Considerations

Exported data represents a snapshot. Document when exports were generated and consider freshness requirements for your analysis:

- Historical pattern analysis: Freshness less critical; cached data acceptable

- Competitive monitoring: May require recent data; consider timing of exports

- Crisis tracking: Real-time matters; use forceLatest when necessary

Privacy and Compliance

Exported social data carries responsibilities. Before exporting:

- Confirm your use case complies with platform terms of service

- Consider data retention policies for your exports

- Handle personally identifiable information according to relevant regulations

- Document data provenance for compliance purposes
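One common way to reduce exposure when handling personally identifiable information is to replace user IDs with keyed hashes before sharing a dataset internally. This is a sketch of that idea, not compliance advice; the key and ID shown are placeholders, and pseudonymized data may still count as personal data under some regulations.

```python
import hashlib
import hmac

def pseudonymize(user_id, secret_key):
    """Replace a user ID with a keyed hash.

    The same ID and key always give the same token, so records stay joinable,
    but the original ID cannot be read back from the token.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

# Placeholder key; in practice, load it from a secrets store, never from source code.
key = b"replace-with-a-secret-key"

token = pseudonymize("12345", key)
same_token = pseudonymize("12345", key)
other_token = pseudonymize("12345", b"a-different-key")
```

Using a keyed hash rather than a plain one means someone without the key cannot rebuild the mapping by hashing known user IDs.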

Key Takeaways

- Dashboard monitoring and export-based analysis serve different needs. Use dashboards for real-time awareness; use exports when you need statistical rigor, historical depth, or integration with external data.

- Structure exports to match your analytical workflow. Field selection, format choices, and aggregation levels should reflect whether you're heading to a spreadsheet, statistical software, or machine learning pipeline.

- Validate exports before analysis. Record counts, date ranges, and field completeness checks prevent wasted analytical effort on incomplete data.

- CSV exports compile paginated data into analysis-ready files. This transforms what could be tedious API pagination into efficient bulk retrieval.

- Pre-calculated aggregations accelerate analysis. Fields like relevantTweetsLikesSum provide derived metrics without requiring post-export calculation.

Conclusion

The gap between social listening and social intelligence often comes down to analytical depth. Teams that export data systematically, structure it for their analytical tools, and apply rigorous methods extract insights that dashboard-bound competitors miss.

Start with a specific analytical question. Map that question to required data fields. Configure exports that capture those fields efficiently. Validate before analyzing. Then let your statistical tools, visualization platforms, and models reveal what the raw feeds couldn't show.

The data is there. The export pathways exist. The remaining variable is the analytical rigor you bring to the work.